Deploying S3 static site with AWS CDK pipeline

Published on 3/6/2024

Welcome to this tutorial on deploying an S3 static site with a CDK pipeline!

In this tutorial, you will learn how to set up a CDK pipeline to deploy a static site to Amazon S3. We will cover the entire process, from creating the necessary infrastructure with AWS CDK to automating the deployment with AWS CodePipeline. You can find the complete code on GitHub here

Let's get started!

Folder Structure

We will create a monorepo with the following folder structure:

static-site/

├── app/

├── infra/

The app folder will be a simple Vite application, and the infra folder will contain the CDK code to create the necessary infrastructure.

Vite application

After creating the folders, cd into the app folder and run the following command to create a new Vite application:

pnpm create vite

When prompted for a project name, you can enter . to scaffold into the current folder, so that package.json ends up directly inside app. Feel free to use npm or yarn if you prefer.

To learn more about Vite, you can check out the official documentation.

AWS CDK infrastructure

This tutorial assumes you have the AWS CLI installed and configured on your local machine. If not, you can follow the instructions here to install and configure the AWS CLI.

Make sure the configured profile has permissions to provision AWS resources. To keep things simple, the profile I used had the AdministratorAccess policy attached to it.

Let's install aws-cdk:

pnpm install -g aws-cdk

Verify the installation by running the following command:

cdk --version

Bootstrap the CDK environment

AWS CDK requires certain resources and permissions to be able to deploy apps for you, for example, an S3 bucket to store build artifacts and IAM execution roles.

Let's bootstrap the CDK environment by running the following command:

cdk bootstrap

Learn more about bootstrapping here

Create a new CDK project

Let's cd into the infra folder and run the following command to create a new CDK project:

cdk init app --language typescript

This will create a new CDK project with the following folder structure:

infra/

├── bin/

├── lib/

├── test/

├── cdk.json

├── jest.config.js

├── package.json

├── README.md

├── tsconfig.json

Before we continue, let's clean up some boilerplate code that we don't need. Open the lib/infra-stack.ts file and remove the generated example code so that only the empty InfraStack class remains:

import { Construct } from 'constructs';

import {

Stack,

StackProps

} from 'aws-cdk-lib';

export class InfraStack extends Stack {

constructor(scope: Construct, id: string, props?: StackProps) {

super(scope, id, props);

//...

}

}

We're going to use the aws_codepipeline module to create our pipeline. The pipeline will have 3 stages:

- Source stage -> getting the source code from GitHub

- Build stage -> building the Vite application inside a CodeBuild project

- Deploy stage -> deploying the built application to an S3 bucket with a CloudFront distribution in front of it.
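To make the hand-off between the three stages concrete, here is a minimal sketch in plain TypeScript (not CDK code). It models each stage by the artifacts it consumes and produces, and checks that every input was produced by an earlier stage. The artifact labels SourceOutput and BuildOutput, and the StageSketch and findBrokenStages names, are hypothetical and only serve the illustration.

```typescript
// Plain TypeScript model of the pipeline's artifact hand-off (not CDK code).
// The artifact names 'SourceOutput' and 'BuildOutput' are hypothetical labels.
interface StageSketch {
  name: string;
  inputs: string[];
  outputs: string[];
}

const stages: StageSketch[] = [
  { name: 'Source', inputs: [], outputs: ['SourceOutput'] },
  { name: 'Build', inputs: ['SourceOutput'], outputs: ['BuildOutput'] },
  { name: 'Deploy', inputs: ['BuildOutput'], outputs: [] },
];

// Returns the names of stages that consume an artifact no earlier stage produced.
function findBrokenStages(pipeline: StageSketch[]): string[] {
  const available = new Set<string>();
  const broken: string[] = [];
  for (const stage of pipeline) {
    if (!stage.inputs.every((artifact) => available.has(artifact))) {
      broken.push(stage.name);
    }
    stage.outputs.forEach((artifact) => available.add(artifact));
  }
  return broken;
}

console.log(findBrokenStages(stages)); // prints []
```

The order matters: if Build ran before Source, it would ask for an artifact that does not exist yet, which is why the stages are added to the pipeline in this sequence.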

Stage 1: Source

We will use GitHub as our source provider. To do this, we need to create a new GitHubSourceAction:

import { Construct } from 'constructs';

import {

Stack,

StackProps,

SecretValue,

aws_codepipeline as codepipeline,

aws_codepipeline_actions as codepipelineActions,

} from 'aws-cdk-lib';

export class InfraStack extends Stack {

constructor(scope: Construct, id: string, props?: StackProps) {

super(scope, id, props);

const sourceOutput = new codepipeline.Artifact()

const sourceAction = new codepipelineActions.GitHubSourceAction({

actionName: 'GithubSource',

owner: 'mactunechy',

repo: 'cdk-s3-static-site-hosting',

oauthToken: SecretValue.secretsManager('github_token2'),

output: sourceOutput,

branch: 'master'

})

}

}

Make sure you have created the monorepo on GitHub, and use your own owner, repo, and branch values in the GitHubSourceAction constructor.

Setting up the GitHub token

In your GitHub account, create an access token with the following permissions:

- repo

- admin:repo_hook

Learn more about creating a GitHub token here

In the AWS console, navigate to the AWS Secrets Manager service and store the token as a plaintext secret. I named mine github_token2, but you can name yours whatever you want.

We will use this secret to authenticate with GitHub in the GitHubSourceAction constructor.
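If the secret ends up stored as key/value pairs instead of plaintext, the lookup needs to name the field that holds the token. The fragment below is a small sketch of both variants; the field name token is hypothetical, so use whatever key you stored the token under.

```typescript
import { SecretValue } from 'aws-cdk-lib';

// Plaintext secret: the whole secret string is the token (what this tutorial uses).
const plaintextToken = SecretValue.secretsManager('github_token2');

// Key/value secret: read a single JSON field instead.
// The field name 'token' is hypothetical; use the key you chose in Secrets Manager.
const jsonFieldToken = SecretValue.secretsManager('github_token2', {
  jsonField: 'token',
});
```

Either value can be passed as oauthToken to the GitHubSourceAction.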

Stage 2: Build

We will use the AWS CodeBuild service to build our Vite application into a production-ready bundle. The bundle will be output by the build action as an artifact and stored in the pipeline's artifacts S3 bucket.

import { Construct } from 'constructs';

import {

Stack,

StackProps,

SecretValue,

aws_codepipeline as codepipeline,

aws_codepipeline_actions as codepipelineActions,

aws_codebuild as codebuild,

} from 'aws-cdk-lib';

export class InfraStack extends Stack {

constructor(scope: Construct, id: string, props?: StackProps) {

super(scope, id, props);

const sourceOutput = new codepipeline.Artifact()

const buildOutput = new codepipeline.Artifact()

const sourceAction = new codepipelineActions.GitHubSourceAction({

actionName: 'GithubSource',

owner: 'mactunechy',

repo: 'cdk-s3-static-site-hosting',

oauthToken: SecretValue.secretsManager('github_token2'),

output: sourceOutput,

branch: 'master'

})

const buildAction = new codepipelineActions.CodeBuildAction({

actionName: 'Build',

project: new codebuild.PipelineProject(this, 'ViteSiteBuildProject', {

buildSpec: codebuild.BuildSpec.fromObject({

version: '0.2',

phases: {

install: {

'runtime-versions': {

nodejs: '20.x'

},

commands: [

'cd app',

'npm install pnpm -g',

'pnpm install'

],

},

build: {

commands: ['pnpm run build'],

},

},

artifacts: {

files: ['**/*'],

'base-directory': 'app/dist',

},

}),

environment: {

buildImage: codebuild.LinuxBuildImage.AMAZON_LINUX_2_5,

}

}),

input: sourceOutput,

outputs: [buildOutput],

})

}

}
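The buildspec above is a plain object, so it can also be produced by a small function if you want to reuse it for another folder or Node.js version. The sketch below is one way to do that; viteBuildSpec and its options are hypothetical names, and the defaults simply mirror the values used in this tutorial.

```typescript
// Hypothetical helper: builds the same buildspec object as above for any app folder.
interface ViteBuildSpecOptions {
  appDir: string;       // folder that contains the Vite app, e.g. 'app'
  nodeVersion?: string; // CodeBuild Node.js runtime version, defaults to '20.x'
  outDir?: string;      // Vite output folder, defaults to 'dist'
}

function viteBuildSpec(options: ViteBuildSpecOptions) {
  const { appDir, nodeVersion = '20.x', outDir = 'dist' } = options;
  return {
    version: '0.2',
    phases: {
      install: {
        'runtime-versions': { nodejs: nodeVersion },
        // The working directory carries over to the following commands and phases.
        commands: [`cd ${appDir}`, 'npm install pnpm -g', 'pnpm install'],
      },
      build: { commands: ['pnpm run build'] },
    },
    artifacts: {
      // Everything under the build output folder becomes the build artifact.
      files: ['**/*'],
      'base-directory': `${appDir}/${outDir}`,
    },
  };
}

const spec = viteBuildSpec({ appDir: 'app' });
console.log(spec.artifacts['base-directory']); // prints app/dist
```

The result can be handed to codebuild.BuildSpec.fromObject(viteBuildSpec({ appDir: 'app' })) in place of the inline object.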

Stage 3: Deployment

We will use the S3 deploy action to deploy the built application bundle to our S3 bucket. The output of the previous stage will be the input for this stage.

Before we continue with the deploy stage, we need to create an S3 bucket and a CloudFront distribution to serve our static site.

import { Construct } from 'constructs';

import {

Stack,

StackProps,

SecretValue,

RemovalPolicy,

aws_codepipeline as codepipeline,

aws_codepipeline_actions as codepipelineActions,

aws_codebuild as codebuild,

aws_s3 as s3,

aws_cloudfront as cloudfront,

aws_cloudfront_origins as cfOrigins,

} from 'aws-cdk-lib';

export class InfraStack extends Stack {

constructor(scope: Construct, id: string, props?: StackProps) {

super(scope, id, props);

const sourceOutput = new codepipeline.Artifact()

const buildOutput = new codepipeline.Artifact()

const sourceAction = new codepipelineActions.GitHubSourceAction({

actionName: 'GithubSource',

owner: 'mactunechy',

repo: 'cdk-s3-static-site-hosting',

oauthToken: SecretValue.secretsManager('github_token2'),

output: sourceOutput,

branch: 'master'

})

const buildAction = new codepipelineActions.CodeBuildAction({

actionName: 'Build',

project: new codebuild.PipelineProject(this, 'ViteSiteBuildProject', {

buildSpec: codebuild.BuildSpec.fromObject({

version: '0.2',

phases: {

install: {

'runtime-versions': {

nodejs: '20.x'

},

commands: [

'cd app',

'npm install pnpm -g',

'pnpm install'

],

},

build: {

commands: ['pnpm run build'],

},

},

artifacts: {

files: ['**/*'],

'base-directory': 'app/dist',

},

}),

environment: {

buildImage: codebuild.LinuxBuildImage.AMAZON_LINUX_2_5,

}

}),

input: sourceOutput,

outputs: [buildOutput],

})

const bucket = new s3.Bucket(this, 'ViteStiteBucket', {

websiteIndexDocument: 'index.html',

removalPolicy: RemovalPolicy.DESTROY,

blockPublicAccess: {

blockPublicAcls: false,

blockPublicPolicy: false,

ignorePublicAcls: false,

restrictPublicBuckets: false,

},

publicReadAccess: true,

})

const viteSiteOAI = new cloudfront.OriginAccessIdentity(this, 'OriginAccessControl', {

comment: 'Vite site OAI'

});

new cloudfront.Distribution(this, 'ViteSiteDistribution', {

defaultBehavior: {

origin: new cfOrigins.S3Origin(bucket, { originAccessIdentity: viteSiteOAI }),

viewerProtocolPolicy: cloudfront.ViewerProtocolPolicy.ALLOW_ALL,

allowedMethods: cloudfront.AllowedMethods.ALLOW_GET_HEAD_OPTIONS

},

errorResponses: [

{

httpStatus: 403,

responsePagePath: '/error.html',

responseHttpStatus: 200

}

],

})

const deployAction = new codepipelineActions.S3DeployAction({

actionName: 'S3Deploy',

input: buildOutput,

bucket

})

}

}

Let's walk through the code we added:

S3 bucket

- We create an S3 bucket that we're going to use to host our static site. We set websiteIndexDocument to index.html to enable static website hosting.

- We set publicReadAccess to true, which adds a policy statement allowing public read access to the bucket.

CloudFront distribution

- viteSiteOAI is an identity used by the CloudFront distribution to access the S3 bucket objects securely and forward them to the requester. Learn more about CloudFront OAI here

- We create a new CloudFront distribution with the S3 bucket as the origin. We set viewerProtocolPolicy to ALLOW_ALL to allow both http and https requests.

Finally, we create a new S3DeployAction to deploy the built application to the S3 bucket.
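The bucket above is publicly readable even though CloudFront sits in front of it. A possible variation, sketched here under a few assumptions, keeps the bucket private so that only the OAI can read from it. It drops websiteIndexDocument (as far as we can tell, S3Origin treats a website-enabled bucket as a plain HTTP origin, in which case the OAI is not what grants access) and relies on defaultRootObject instead. The stack name and construct IDs are hypothetical, and newer aws-cdk-lib releases may flag S3Origin as deprecated. The sketch also adds a CfnOutput so the distribution's domain name is printed after deployment.

```typescript
import { Construct } from 'constructs';
import {
  CfnOutput,
  RemovalPolicy,
  Stack,
  StackProps,
  aws_cloudfront as cloudfront,
  aws_cloudfront_origins as cfOrigins,
  aws_s3 as s3,
} from 'aws-cdk-lib';

// Hypothetical stack: a variation on the tutorial's bucket and distribution.
export class PrivateSiteSketchStack extends Stack {
  constructor(scope: Construct, id: string, props?: StackProps) {
    super(scope, id, props);

    // No website hosting and no public access: only CloudFront reads the bucket.
    const bucket = new s3.Bucket(this, 'PrivateSiteBucket', {
      blockPublicAccess: s3.BlockPublicAccess.BLOCK_ALL,
      removalPolicy: RemovalPolicy.DESTROY,
    });

    const oai = new cloudfront.OriginAccessIdentity(this, 'SiteOAI', {
      comment: 'Vite site OAI',
    });

    const distribution = new cloudfront.Distribution(this, 'SiteDistribution', {
      // CloudFront serves index.html for requests to the root URL.
      defaultRootObject: 'index.html',
      defaultBehavior: {
        origin: new cfOrigins.S3Origin(bucket, { originAccessIdentity: oai }),
        viewerProtocolPolicy: cloudfront.ViewerProtocolPolicy.REDIRECT_TO_HTTPS,
      },
    });

    // Print the site's domain name after `cdk deploy`.
    new CfnOutput(this, 'SiteDomainName', {
      value: distribution.distributionDomainName,
    });
  }
}
```

The S3DeployAction works the same way with this bucket, since the pipeline's role is granted write access to the bucket independently of public access settings.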

The pipeline

We tie everything together by adding the stages to a pipeline:

import { Construct } from 'constructs';

import {

Stack,

StackProps,

SecretValue,

aws_codepipeline as codepipeline,

aws_codepipeline_actions as codepipelineActions,

aws_codebuild as codebuild,

aws_s3 as s3,

aws_cloudfront as cloudfront,

aws_cloudfront_origins as cfOrigins,

RemovalPolicy

} from 'aws-cdk-lib';

export class InfraStack extends Stack {

constructor(scope: Construct, id: string, props?: StackProps) {

super(scope, id, props);

const sourceOutput = new codepipeline.Artifact()

const buildOutput = new codepipeline.Artifact()

const sourceAction = new codepipelineActions.GitHubSourceAction({

actionName: 'GithubSource',

owner: 'mactunechy',

repo: 'cdk-s3-static-site-hosting',

oauthToken: SecretValue.secretsManager('github_token2'),

output: sourceOutput,

branch: 'master'

})

const buildAction = new codepipelineActions.CodeBuildAction({

actionName: 'Build',

project: new codebuild.PipelineProject(this, 'ViteSiteBuildProject', {

buildSpec: codebuild.BuildSpec.fromObject({

version: '0.2',

phases: {

install: {

'runtime-versions': {

nodejs: '20.x'

},

commands: [

'cd app',

'npm install pnpm -g',

'pnpm install'

],

},

build: {

commands: ['pnpm run build'],

},

},

artifacts: {

files: ['**/*'],

'base-directory': 'app/dist',

},

}),

environment: {

buildImage: codebuild.LinuxBuildImage.AMAZON_LINUX_2_5,

}

}),

input: sourceOutput,

outputs: [buildOutput],

})

const bucket = new s3.Bucket(this, 'ViteStiteBucket', {

websiteIndexDocument: 'index.html',

removalPolicy: RemovalPolicy.DESTROY,

blockPublicAccess: {

blockPublicAcls: false,

blockPublicPolicy: false,

ignorePublicAcls: false,

restrictPublicBuckets: false,

},

publicReadAccess: true,

})

const viteSiteOAI = new cloudfront.OriginAccessIdentity(this, 'OriginAccessControl', {

comment: 'Vite site OAI'

});

new cloudfront.Distribution(this, 'ViteSiteDistribution', {

defaultBehavior: {

origin: new cfOrigins.S3Origin(bucket, { originAccessIdentity: viteSiteOAI }),

viewerProtocolPolicy: cloudfront.ViewerProtocolPolicy.ALLOW_ALL,

allowedMethods: cloudfront.AllowedMethods.ALLOW_GET_HEAD_OPTIONS

},

errorResponses: [

{

httpStatus: 403,

responsePagePath: '/error.html',

responseHttpStatus: 200

}

],

})

const deployAction = new codepipelineActions.S3DeployAction({

actionName: 'S3Deploy',

input: buildOutput,

bucket

})

const pipeline = new codepipeline.Pipeline(this, 'ViteSitePipeline', {

pipelineName: 'ViteSitePipeline',

// skip creating a KMS customer managed key (CMK), since we're not doing any cross-account deployments

crossAccountKeys: false,

});

pipeline.addStage({

stageName: 'Source',

actions: [sourceAction],

})

pipeline.addStage({

stageName: 'Build',

actions: [buildAction]

})

pipeline.addStage({

stageName: 'Deploy',

actions: [deployAction]

})

}

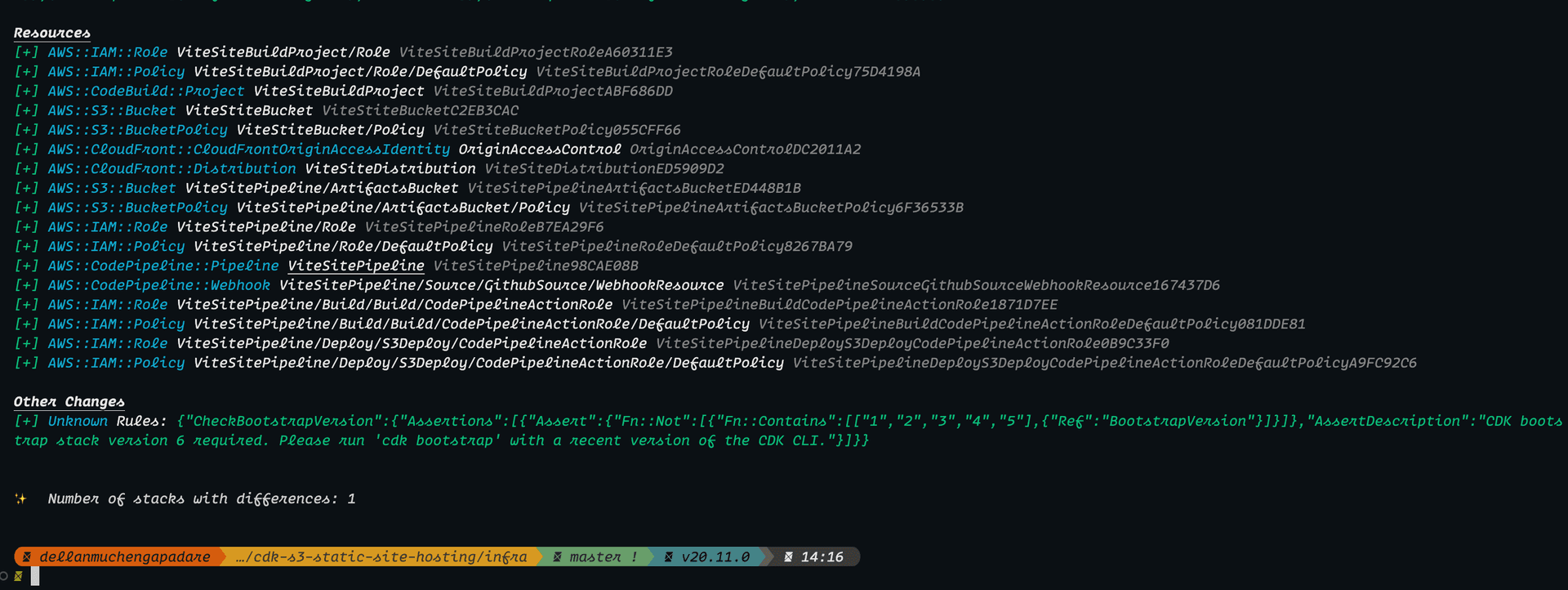

}We're almost done. we can check the resources that are going to be deployed by running the following command:

cdk diff

Push the code to Github and deploy by running:

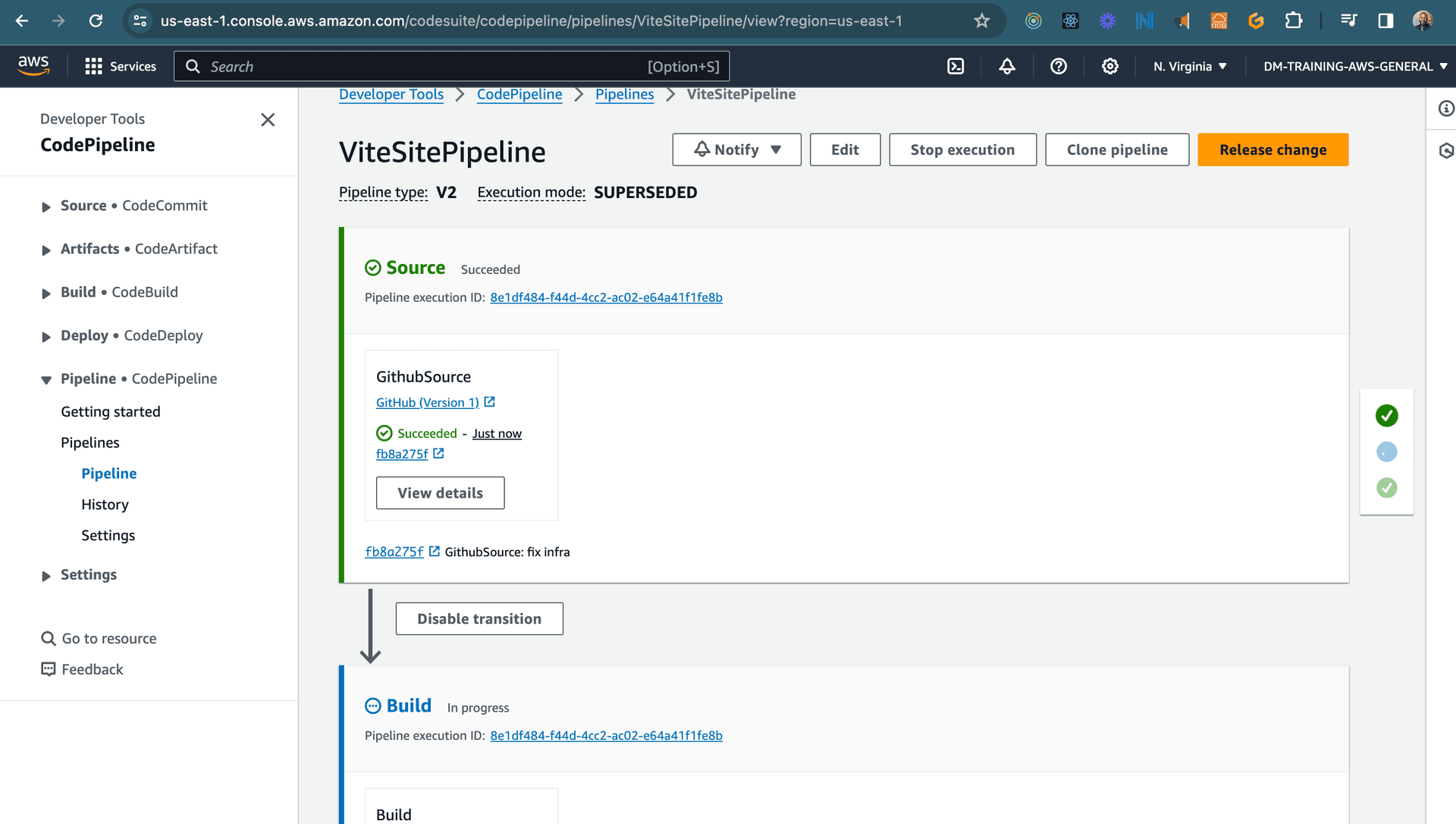

cdk deployOnce it's deployment is complete, the pipeline automatically start running and you should see the stages running in the AWS CodePipeline console.

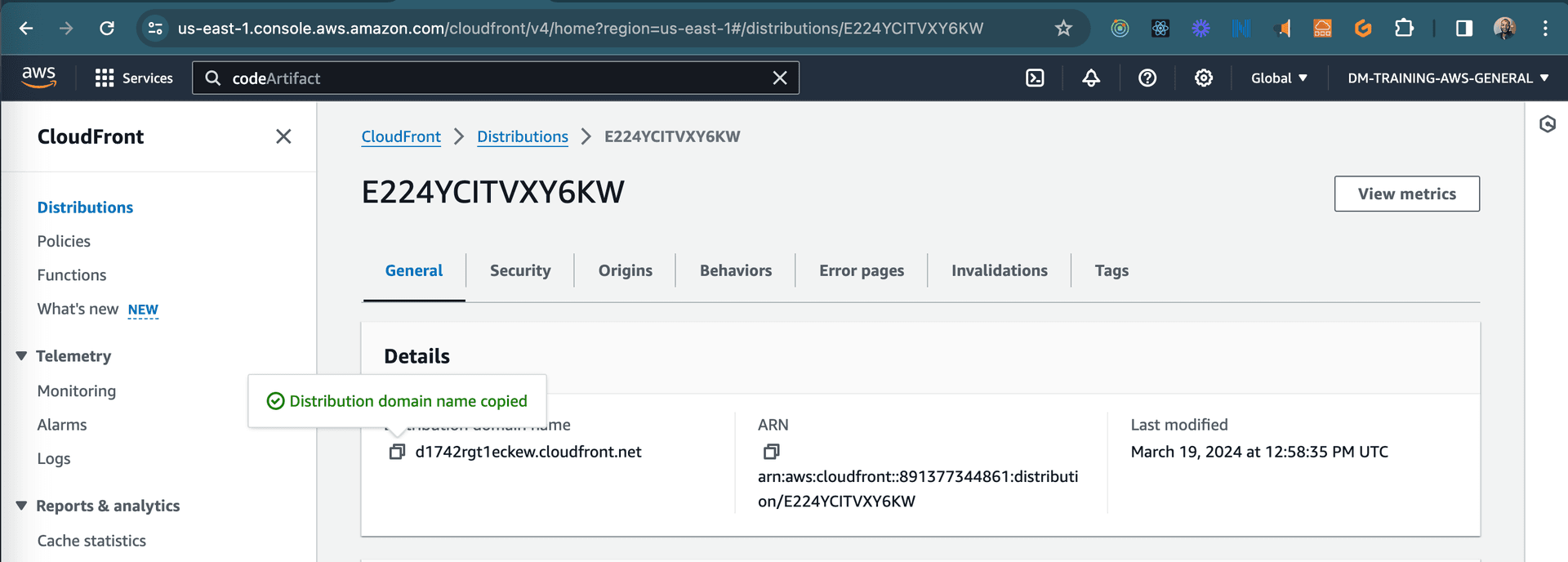

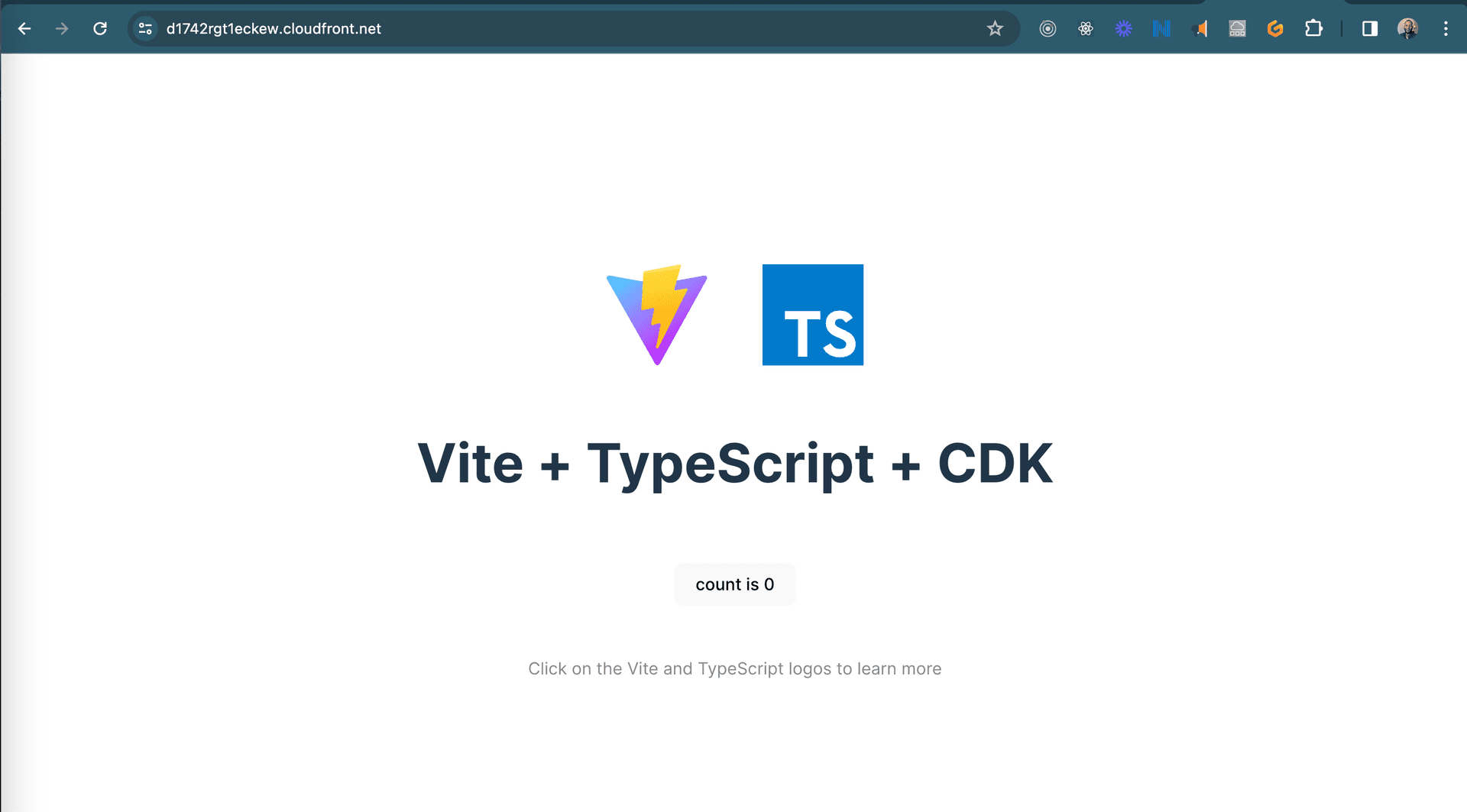

Once the pipeline is complete, you can navigate to the CloudFront distribution domain name to see your static site live.

That's it! You've successfully deployed a static site to Amazon S3 using an AWS CDK pipeline.

Improvements

Currently, we can trigger a pipeline run by pushing changes to the master branch. Our site will be updated as expected, but the CloudFront cache will still be stale. We can add a cache invalidation Lambda function to the pipeline to invalidate the CloudFront cache after a successful deployment. Learn more here
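As a small preview of what such a function would send, here is a sketch of the request body for CloudFront's CreateInvalidation API, built in plain TypeScript. The helper name buildInvalidationRequest and the placeholder values are hypothetical; this is not the full Lambda function, only the piece that decides what gets invalidated.

```typescript
// Shape of the input that CloudFront's CreateInvalidation API expects.
interface InvalidationRequest {
  DistributionId: string;
  InvalidationBatch: {
    CallerReference: string;
    Paths: { Quantity: number; Items: string[] };
  };
}

// Hypothetical helper: builds a request that invalidates the given paths.
function buildInvalidationRequest(
  distributionId: string,
  callerReference: string,
  paths: string[] = ['/*'],
): InvalidationRequest {
  return {
    DistributionId: distributionId,
    InvalidationBatch: {
      // Must be unique per invalidation; a pipeline job ID is one option.
      CallerReference: callerReference,
      Paths: { Quantity: paths.length, Items: paths },
    },
  };
}

// 'E123EXAMPLE' and 'job-42' are placeholder values.
const request = buildInvalidationRequest('E123EXAMPLE', 'job-42');
console.log(request.InvalidationBatch.Paths.Quantity); // prints 1
```

Inside a Lambda function, an object like this could be passed to CreateInvalidationCommand from the @aws-sdk/client-cloudfront package, after which the function would report success or failure back to CodePipeline.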

We will cover this in a follow-up post. Happy coding!!